Luc Giffon, one of our PhD students, and his three supervisors, Stéphane Ayache, Thierry Artières and Hachem Kadri, have had a paper accepted at IJCNN (International Joint Conference on Neural Networks), an A-class conference.

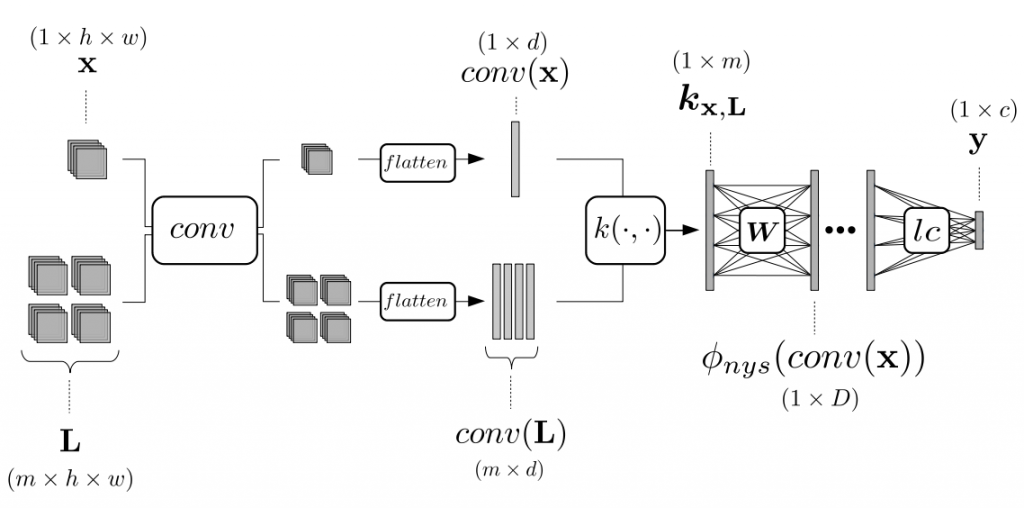

This paper, entitled “Deep Networks with Adaptive Nyström Approximation”, introduces a new kind of neural network layer based on the Nyström approximation of kernel functions. Roughly speaking, any fully connected layer in a neural network applies a (possibly non-linear) transformation to its input, and so does the feature map induced by the Nyström approximation of a kernel function. We can therefore use the Nyström feature map as a drop-in replacement for a fully connected layer in a deep neural network, possibly even after some convolutional layers. Instead of only applying the standard, analytic Nyström approximation, we can also learn the Nyström weight matrix with the back-propagation algorithm.
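To make the idea concrete, here is a minimal NumPy sketch, not the authors' implementation. It assumes a Gaussian (RBF) kernel, a fixed set of `m` landmark points `l_1, ..., l_m`, and the standard Nyström feature map `phi(x) = W k_L(x)`, where `k_L(x) = (k(l_1, x), ..., k(l_m, x))` and the analytic choice is `W = K_mm^{-1/2}`, with `K_mm` the kernel matrix of the landmarks. The function names, the toy linear head `v`, the choice of landmarks and the hand-written gradients are illustrative assumptions; a deep-learning framework would compute the gradients by automatic differentiation instead.

```python
import numpy as np


def rbf_kernel(A, B, gamma=1.0):
    """Gaussian (RBF) kernel matrix with entries exp(-gamma * ||a - b||^2)."""
    sq_dists = (
        np.sum(A**2, axis=1)[:, None]
        + np.sum(B**2, axis=1)[None, :]
        - 2.0 * A @ B.T
    )
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))


def nystrom_init(landmarks, gamma=1.0, eps=1e-10):
    """Analytic Nystrom weights W = K_mm^{-1/2} from the landmark Gram matrix."""
    K_mm = rbf_kernel(landmarks, landmarks, gamma)
    eigvals, eigvecs = np.linalg.eigh(K_mm)
    eigvals = np.maximum(eigvals, eps)  # guard against tiny or negative eigenvalues
    return eigvecs @ np.diag(eigvals**-0.5) @ eigvecs.T


def nystrom_layer(X, landmarks, W, gamma=1.0):
    """Nystrom features, one row per input x: phi(x) = W k_L(x)."""
    return rbf_kernel(X, landmarks, gamma) @ W.T


def train_step(X, y, landmarks, W, v, gamma=1.0, lr=0.02):
    """One gradient-descent step on the MSE of y_hat = nystrom_layer(X) @ v.

    Both the Nystrom weight matrix W and the (hypothetical) linear head v
    are updated; gradients are derived by hand for illustration only.
    Returns the updated (W, v) and the loss *before* the update.
    """
    K = rbf_kernel(X, landmarks, gamma)        # (n, m), fixed for fixed landmarks
    H = K @ W.T                                # (n, m) Nystrom features
    err = H @ v - y                            # (n,) residuals
    n = X.shape[0]
    grad_v = (2.0 / n) * H.T @ err             # dL/dv
    grad_W = (2.0 / n) * np.outer(v, K.T @ err)  # dL/dW, since y_hat = K W^T v
    loss = np.mean(err**2)
    return W - lr * grad_W, v - lr * grad_v, loss


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.uniform(-1.0, 1.0, size=(20, 2))  # toy inputs
    y = np.sin(3.0 * X[:, 0])                 # toy regression target
    landmarks = X[:5]                          # hypothetical choice: first 5 points
    W = nystrom_init(landmarks)                # start from the analytic approximation
    v = np.zeros(landmarks.shape[0])
    for _ in range(20):
        W, v, loss = train_step(X, y, landmarks, W, v)
    print(f"MSE before last update: {loss:.4f}")
```

With `W = K_mm^{-1/2}`, inner products of the features reproduce the kernel exactly on the landmarks and give the usual Nyström approximation elsewhere. `train_step` then treats `W` like the weight matrix of a dense layer and updates it by gradient descent, a simplified illustration of learning the Nyström weight matrix by back-propagation.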

The full paper is available on the open archive HAL.

The conference will be held in Budapest from 14 to 19 July.